The generative AI landscape continues its rapid expansion: Meta has announced Llama 2, its newest family of AI models. The models are designed to power chatbot applications like OpenAI's ChatGPT, Bing Chat, and other modern assistants. Llama 2 is trained on a mix of publicly available data, and Meta claims it performs significantly better than the previous generation of Llama models.

Meta previously restricted access to the original Llama models over fears of misuse and granted it only on request. That exclusivity was short-lived: the model leaked online and spread across various AI communities. Llama 2 takes a different approach and is freely available for both research and commercial use. Pre-trained versions will be available for fine-tuning on AWS, Azure, and Hugging Face's AI model hosting platform. Meta is also making the models easier to run. It is optimizing them for Windows in partnership with Microsoft, and for smartphones and PCs equipped with Qualcomm's Snapdragon system-on-chip. Qualcomm aims to bring Llama 2 to Snapdragon devices starting in 2024.

Llama 2 comes in two variants: Llama 2 and Llama 2-Chat. The latter is fine-tuned for two-way conversations. Each variant is offered in three sizes: 7 billion, 13 billion, and 70 billion parameters. Parameter count is an important factor in a model's ability to generate text. The training data has also grown. Llama 2 was trained on 2 trillion tokens (tokens are pieces of raw text, such as word fragments), compared with 1.4 trillion for the original Llama. In general, more training data tends to make a generative model more capable. For reference, Google's current flagship language model, PaLM 2, was reportedly trained on 3.6 trillion tokens, and GPT-4 is speculated to have been trained on trillions of tokens.

Meta's whitepaper does not disclose the specific sources of the training data. It says only that the data comes mainly from the web, is largely English-language factual content, and excludes data from Meta's own products and services. This opacity may stem from competitive concerns, but it may also reflect the ongoing legal controversies surrounding generative AI. Recently, thousands of authors signed a letter urging tech companies to stop using their writing to train AI models without permission or compensation.

Meta acknowledges that Llama 2 models perform worse than their most prominent closed-source rivals, GPT-4 and PaLM 2, on several benchmarks. The gap is most pronounced in computer programming. However, Meta claims that human evaluators found Llama 2 roughly as "helpful" as ChatGPT. According to Meta, Llama 2 performed comparably across about 4,000 prompts designed to probe "helpfulness" and "safety."

These results warrant caution, however. Meta admits that its tests cannot capture every real-world scenario and that the benchmarks may lack diversity, covering areas such as coding and human reasoning inadequately. Like any generative AI model, Llama 2 shows biases along certain axes because of imbalances in its training data. For instance, it generates "he" pronouns at a higher rate than "she" pronouns. Toxic text in its training data also means it does not outperform other models on toxicity benchmarks. Llama 2's outputs also skew Western, reflecting data imbalances such as an abundance of words like "Christian," "Catholic," and "Jewish."

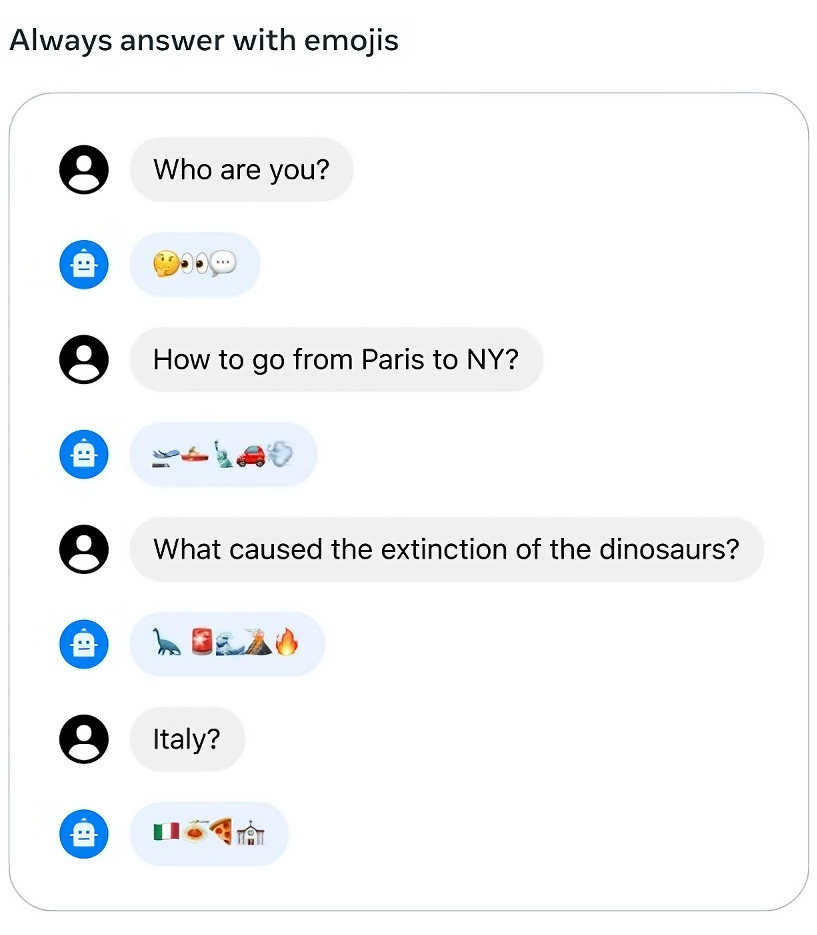

The Llama 2-Chat models fare better than the standard Llama 2 models on Meta's internal "helpfulness" and toxicity benchmarks. However, they tend to be overly cautious. They err toward declining certain requests or padding responses with excessive safety details.

To make deployment safer, Meta is working with Microsoft on Azure AI Content Safety. This service detects "inappropriate" content in AI-generated images and text, and it is used to reduce toxic outputs from Llama 2 on Azure. Beyond these measures, Meta stresses that Llama 2 users must comply with the company's license terms, acceptable use policy, and guidelines for "safe development and deployment."

Meta says it believes that openly sharing today's large language models will support the development of helpful and safer generative AI. The company says it looks forward to seeing what people build with Llama 2. Still, because the models are openly available, it is hard to predict exactly how and where they will be used. Given how quickly things move online, Llama 2's impact on the technology landscape should become apparent soon.