Stability AI, best known for its Stable Diffusion text-to-image models, has released StableLM Zephyr 3B. The new 3 billion parameter large language model (LLM) is tuned for chat applications, with strengths in text generation, summarization, and content personalization.

At roughly 40% of the size of typical 7 billion parameter models, StableLM Zephyr 3B can be deployed on a wider range of hardware. The smaller footprint reduces resource requirements and keeps responses fast, and the model is particularly strong at Q&A and instruction-following tasks. Emad Mostaque, CEO of Stability AI, highlighted the model's performance, noting that it outperforms much larger models such as Meta's Llama-2-70b-chat and Anthropic's Claude-V1.
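As a rough illustration of why parameter count matters for deployment, the memory needed just to hold a model's weights can be estimated as parameters times bytes per parameter. The sketch below assumes 16-bit weights (2 bytes each) and nominal counts of exactly 3 and 7 billion parameters; the helper name `weight_memory_gb` is hypothetical, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights; this is an illustrative
    assumption, not a published figure for any specific model.
    """
    return num_params * bytes_per_param / 1e9


small = 3_000_000_000   # nominal 3B parameters
large = 7_000_000_000   # nominal 7B parameters

print(f"3B weights: ~{weight_memory_gb(small):.0f} GB")
print(f"7B weights: ~{weight_memory_gb(large):.0f} GB")
print(f"size ratio: {small / large:.0%}")
```

Under these assumptions the smaller model needs less than half the weight memory of a 7B model, which is what makes it a candidate for more modest hardware.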

StableLM Zephyr 3B is an extension of the existing StableLM 3B-4e1t model, and its design is inspired by Hugging Face's Zephyr 7B model. The Hugging Face Zephyr models, released under the open-source MIT license, are built to act as helpful assistants, and they set the stage for Stability AI's own release.

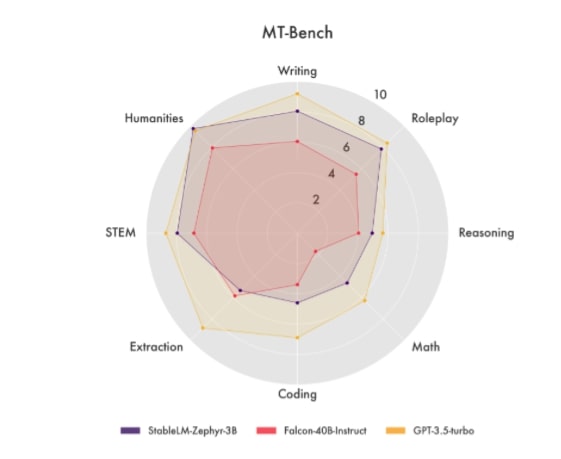

StableLM Zephyr 3B is trained with Direct Preference Optimization (DPO), a technique previously associated with larger 7 billion parameter models. Applying DPO at the 3 billion parameter scale is what sets StableLM Zephyr apart. Stability AI used DPO together with the UltraFeedback dataset from the OpenBMB research group, which consists of over 64,000 prompts and 256,000 responses. This combination, coupled with optimized data training, allows StableLM Zephyr 3B to surpass competing models in the MT Bench evaluation.
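For readers unfamiliar with DPO, the core idea can be sketched for a single preference pair: the model is rewarded for raising the likelihood of the preferred response, relative to a frozen reference model, more than it raises the likelihood of the rejected one. The code below is a minimal sketch of the standard DPO loss, not Stability AI's training code; the function name, the example log-probabilities, and the value `beta=0.1` are illustrative assumptions.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Inputs are summed log-probabilities of each full response under the
    policy being trained and under a frozen reference model. beta scales
    how strongly the policy is pushed away from the reference.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # loss = -log(sigmoid(margin)), computed in a numerically stable way
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical log-probabilities, for illustration only.
favors_chosen = dpo_loss(-10.0, -14.0, -12.0, -12.0)
favors_rejected = dpo_loss(-14.0, -10.0, -12.0, -12.0)
print(favors_chosen < favors_rejected)
```

The loss shrinks as the policy prefers the chosen response more strongly than the reference does, so a preference dataset such as UltraFeedback can be used directly without training a separate reward model.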
