Docker Inc., the company behind the open-source Docker container technology, moved into artificial intelligence (AI) at its DockerCon conference in Los Angeles. The company announced a series of initiatives intended to speed up the development of generative AI (GenAI) applications, with Docker containers as the primary deployment method.

At the heart of these efforts is the new GenAI stack, which combines Docker technology with Neo4j's graph database, LangChain's model chaining technology, and Ollama for running large language models (LLMs). Docker also introduced Docker AI, a product that gives developers AI-powered insights and guidance for container-based development.

Docker holds a central place in today's development ecosystem. Docker CEO Scott Johnston pointed out, "For four years running, Stack Overflow’s community of developers has voted us number one most wanted, number one most loved developer tool," underscoring the platform's popularity among developers worldwide. Docker now counts 20 million monthly active developers.

While Docker containers are already widely used for AI deployment, developing GenAI applications remains harder than it needs to be. Such applications typically require a few core elements: a vector database (which Neo4j now provides) and an LLM (served by Ollama). They also often involve multi-step processes, which is where LangChain's framework comes in.

The Docker GenAI stack aims to streamline the configuration of these components within containers, making GenAI application development more accessible. It targets use cases such as building support agent bots with retrieval-augmented generation (RAG) capabilities, creating Python coding assistants, and automating content generation.
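To make the RAG pattern concrete, here is a minimal sketch of the retrieve-then-generate flow a support bot might follow. It is an illustration under loud assumptions, not the GenAI stack's actual code: the sample documents, the bag-of-words `embed` function, and the `fake_llm` placeholder are all hypothetical stand-ins for what a vector database, an embedding model, and an LLM would do in the real stack.

```python
import math
import re
from collections import Counter

# Hypothetical support documents standing in for a real knowledge base.
DOCS = [
    "Restart a stopped container with docker start.",
    "Use docker compose up to launch all services defined in a compose file.",
    "Volumes persist data outside the container filesystem.",
]


def embed(text):
    """Toy embedding: word counts. A real stack would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, docs, k=1):
    """Return the k documents most similar to the question (the 'R' in RAG)."""
    query = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(query, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, context):
    """Augment the question with the retrieved context (the 'A' in RAG)."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


def fake_llm(prompt):
    """Placeholder for a model call (the 'G' in RAG); it only reports prompt size."""
    return f"[model response to {len(prompt)} characters of prompt]"


def answer(question, docs=DOCS, llm=fake_llm):
    """Chain the three steps: retrieve, build the prompt, generate."""
    return llm(build_prompt(question, retrieve(question, docs)))


if __name__ == "__main__":
    print(retrieve("How do I persist data in volumes?", DOCS))
    print(answer("How do I persist data in volumes?"))
```

Passing the model in as the `llm` argument keeps the chain independent of any particular backend, so the placeholder could be swapped for a real model client without changing the retrieval or prompt-building steps.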

Johnston emphasized, "It's pre-configured, ready to go, and developers can start coding and experimenting to help get the ball rolling." The entire stack is designed for local deployment and is available free of charge. For those requiring deployment and commercial support, Docker and its partners will offer options.

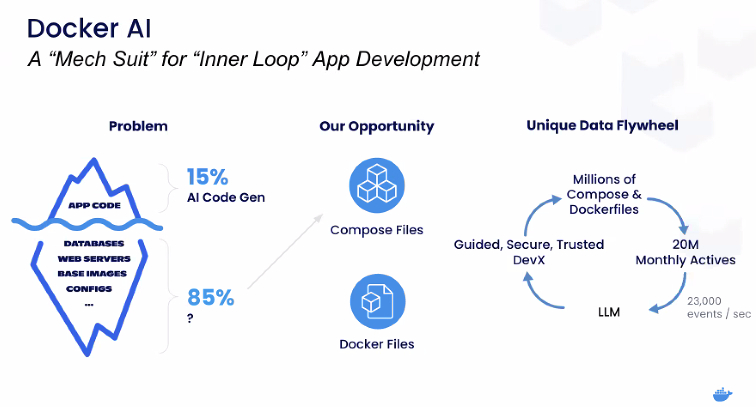

In a market crowded with GenAI developer tools, Docker is entering with Docker AI and describing it in different terms than its competitors use. Instead of calling Docker AI a "copilot," Docker calls it a "mech suit." The idea is that Docker AI gives developers more power and strength to accomplish their tasks.

Docker AI draws on Docker's proprietary data, sourced from millions of Dockerfiles, compose files, and error logs. It integrates into developers' workflows and offers help when errors occur. The tool highlights potential fixes within the development environment and lets developers test and validate those fixes before committing changes. The goal is to improve the developer experience when troubleshooting and resolving issues.

Johnston noted that while tools like GitHub Copilot are valuable, Docker AI is finely tuned to facilitate container development. "It has been trained on a rich proprietary stream of Docker data that other LLMs don't have access to," he emphasized.

With the GenAI stack and Docker AI, Docker Inc. is aiming to simplify AI application development and extend what developers can do with containers. The company presents both initiatives as part of its ongoing commitment to supporting the global developer community.