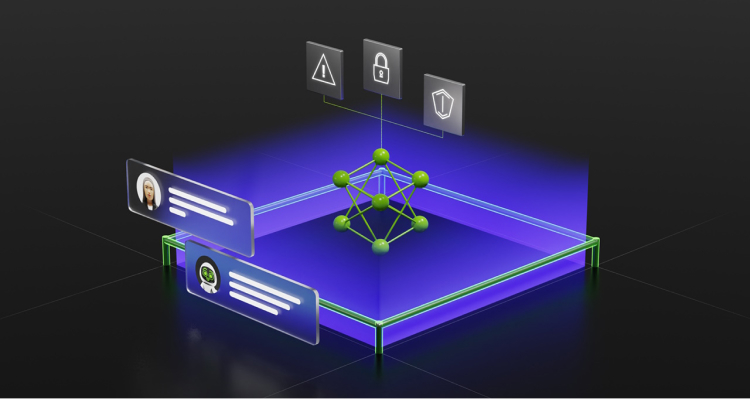

As AI continues to be integrated into various platforms and apps, developers face the challenge of managing AI's tendency to generate inaccurate or inappropriate content. To address this issue, Nvidia recently introduced "NeMo Guardrails," an open-source censorship solution designed for apps powered by large language models. The software aims to provide a one-size-fits-all solution that can be easily implemented in popular AI toolkits like LangChain.

NeMo Guardrails uses Colang, a modeling language for conversational flows, to let developers define restrictions on AI output. With these rules in place, chatbots can be guided to remain on-topic and avoid producing misleading information, offensive responses, or even malicious code. Nvidia has already implemented the solution in collaboration with web application company Zapier.
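To make the idea of a guardrail concrete, here is a minimal sketch in plain Python. It is not NeMo Guardrails' API and not Colang: the `SimpleRails` class, the `fake_llm` stand-in, and the keyword lists are all hypothetical names invented for illustration. It only shows the general pattern of checking the user's prompt before it reaches the model and checking the model's reply before it reaches the user.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Canned answer returned whenever a rail is triggered (hypothetical wording).
REFUSAL = "Sorry, I can't help with that topic."


@dataclass
class SimpleRails:
    """Toy guardrail wrapper: an illustration only, not NeMo's implementation."""

    llm: Callable[[str], str]                      # any function mapping prompt -> reply
    blocked_topics: List[str] = field(default_factory=list)        # input rail terms
    blocked_output_terms: List[str] = field(default_factory=list)  # output rail terms

    @staticmethod
    def _matches(text: str, terms: List[str]) -> bool:
        # Case-insensitive substring match; real systems use far richer checks.
        lowered = text.lower()
        return any(term.lower() in lowered for term in terms)

    def generate(self, prompt: str) -> str:
        # Input rail: refuse off-limits topics before calling the model.
        if self._matches(prompt, self.blocked_topics):
            return REFUSAL
        reply = self.llm(prompt)
        # Output rail: refuse if the model's reply contains a blocked term.
        if self._matches(reply, self.blocked_output_terms):
            return REFUSAL
        return reply


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs without any API key.
    return f"Echo: {prompt}"
```

Wrapping `fake_llm` with `SimpleRails(llm=fake_llm, blocked_topics=["politics"])` would return the refusal for a prompt about politics and pass other prompts through. Keyword matching is chosen here only for brevity; it is easy to evade, which is part of why the effectiveness of any guardrail system is hard to guarantee.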

According to Jonathan Cohen, Nvidia's VP of Applied Research, the Guardrails system has been in development for years and has proven compatible with OpenAI's GPT models. NeMo is designed to work with older language models, such as OpenAI's GPT-3 and Google's T5, as well as AI image generation models like Stable Diffusion 1.5 and Imagen. It is also confirmed to be compatible with all basic LLMs supported by LangChain, including OpenAI's GPT-4.

However, the effectiveness of open-source guardrails remains uncertain. While companies like OpenAI and Stability AI have attempted to assure customers that safeguards against inappropriate content are in place, users often have to rely on the word of these companies. Furthermore, NeMo may not be a perfect solution: Microsoft's Bing AI and Snapchat's "My AI" chatbot have both produced controversial outputs despite attempts to restrict certain content, which shows how hard such restrictions are to enforce.

The AI industry's approach to managing AI-generated content has been based on releasing solutions in a "beta" format, while companies like Google and Microsoft have focused on "responsible" AI development. Modern AI chatbots pose a unique challenge because they can produce harmful or false content without understanding the implications. To prevent AI from acting inappropriately, developers must create robust safeguards, much like a cage around a dangerous animal.

Nvidia's introduction of NeMo Guardrails also highlights the company's vested interest in promoting its AI software suite for businesses. As a major player in the AI hardware space, with over 90% of the global market share in AI training chips, Nvidia stands to benefit from increased adoption of AI solutions.

In sum, the company's efforts to address AI-generated content issues may further solidify its dominance in the industry, even as competitors like Microsoft seek to develop their own AI training chips.
