Cornell University researchers have developed EchoSpeech, a wearable silent-speech interface that uses acoustic sensing and artificial intelligence to recognize up to 31 unvocalized commands from lip and mouth movements. The low-power interface runs on a smartphone and needs only a few minutes of user training data before it can recognize commands. Ruidong Zhang is the lead author of the study, which will be presented at the Association for Computing Machinery Conference on Human Factors in Computing Systems this month. “For people who cannot vocalize sound, this silent speech technology could be an excellent input for a voice synthesizer. It could give patients their voices back,” he said.

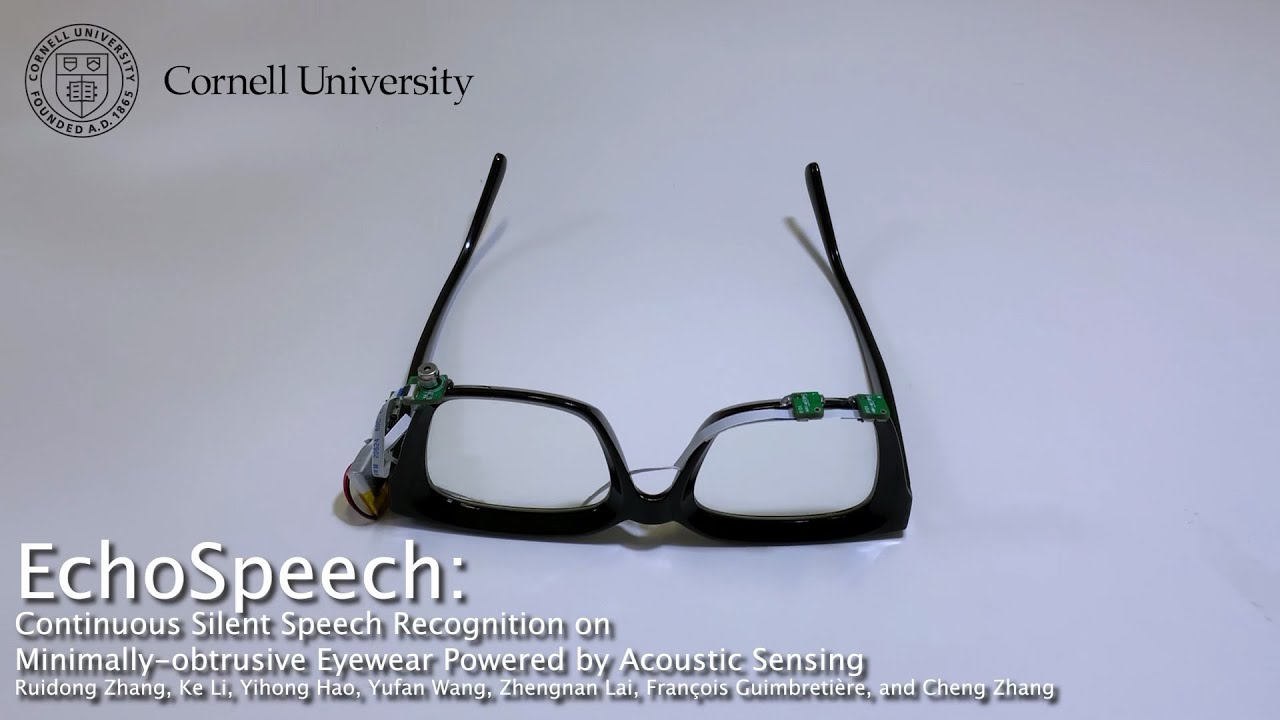

EchoSpeech can be used in environments where speech is inconvenient or inappropriate, such as noisy restaurants or quiet libraries. It can also be paired with a stylus and used with design software such as CAD, greatly reducing the need for a keyboard and mouse. Fitted with microphones and speakers smaller than pencil erasers, the glasses act as a wearable AI-driven sonar system, sending sound waves across the face, receiving the echoes, and recognizing mouth movements from them. A deep learning algorithm analyzes the returning signals and recognizes commands with about 95% accuracy.

“We’re moving sonar onto the body,” said Cheng Zhang, assistant professor of information science and director of Cornell’s Smart Computer Interfaces for Future Interactions (SciFi) Lab.

Cheng Zhang added that most existing silent-speech recognition technology is limited to a predetermined set of commands and requires the user to face or wear a camera, which is impractical, sometimes infeasible, and raises significant privacy concerns. EchoSpeech removes the need for wearable video cameras. Because audio data is much smaller than image or video data, it also takes less bandwidth to process and can be relayed to a smartphone over Bluetooth in real time. François Guimbretière, professor in information science, said, "And because the data is processed locally on your smartphone instead of uploaded to the cloud, privacy-sensitive information never leaves your control."

In summary, the technology could significantly improve the lives of people with speech disabilities who want to interact more easily with those around them. Because it can drive a voice synthesizer, it could help patients get their voices back. The same silent-command approach could also make hands-free device control practical in settings where speaking aloud is not an option.
