Large language models like GPT-4 and Claude have showcased remarkable prowess, but the veil of secrecy surrounding their training data has long raised concerns. The Allen Institute for AI (AI2) is now charting a new course, introducing a colossal text dataset named Dolma, designed to be open, accessible, and subject to scrutiny.

Dolma, an acronym for "Data to feed OLMo's Appetite," is the cornerstone of AI2's ambitious project, the Open Language Model (OLMo). Because OLMo is intended to be open and freely usable by the AI research community, AI2 asserts that the dataset used to build it should be equally unobstructed.

Dolma is the first "data artifact" AI2 has released in connection with OLMo. In a comprehensive blog post, AI2's Luca Soldaini explains the rationale behind Dolma's creation and the curation process behind it. He also hints at an upcoming paper that will cover the dataset's development in more detail.

While companies like OpenAI and Meta disclose some specifics about their datasets, much remains proprietary. This opacity hampers scrutiny and innovation and raises ethical questions. Speculation has even arisen that some proprietary data was obtained in ethically or legally questionable ways, sparking debates about privacy and intellectual property rights.

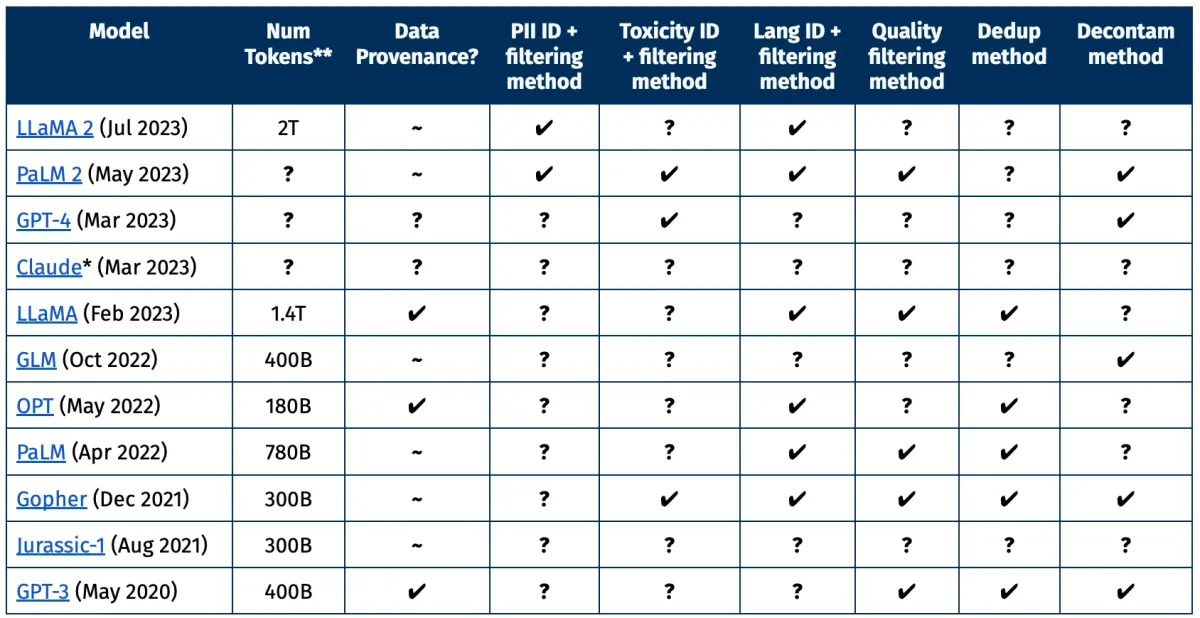

As a chart from AI2 illustrates, what the industry's largest players currently disclose only scratches the surface of the information researchers want. Critical details remain concealed, such as what was omitted, the rationale for judging some text higher or lower quality, and whether personal details were redacted.

Although the competitive nature of the AI landscape may explain these corporate practices, they hinder external researchers' ability to understand, replicate, and improve upon existing models. AI2's Dolma aims to reverse this pattern, offering full transparency by documenting its sources, its processing methods, and even the rationale behind paring the dataset down to original English-language content.

Dolma isn't the first attempt at an open dataset, yet it stakes a claim as the largest in scale, comprising 3 trillion tokens (tokens being the units of text that language models process). Moreover, AI2 asserts that Dolma distinguishes itself by taking a straightforward approach to accessibility and permissions. The dataset is released under the "ImpACT license for medium-risk artifacts."

Prospective users of Dolma are expected to adhere to a set of stipulations, including furnishing contact information, outlining intended use cases, sharing derivative works, and agreeing to the terms of the license. Additionally, users commit not to employ Dolma in contexts such as surveillance or disinformation, aligning with responsible AI use.

For those concerned that their data may have been inadvertently included, AI2 offers a dedicated removal request form that handles specific instances case by case. The form reflects AI2's stated commitment to ethical use and to individuals' rights within an openly available dataset.

In a domain where innovation often necessitates a trade-off between transparency and competitive advantage, AI2's Dolma signifies a pivotal stride towards fostering openness, shared knowledge, and ethical AI development. As the AI landscape evolves, the significance of such initiatives in shaping responsible AI practices is undeniable.